Can You Trust ChatGPT to Build Pay Ranges?

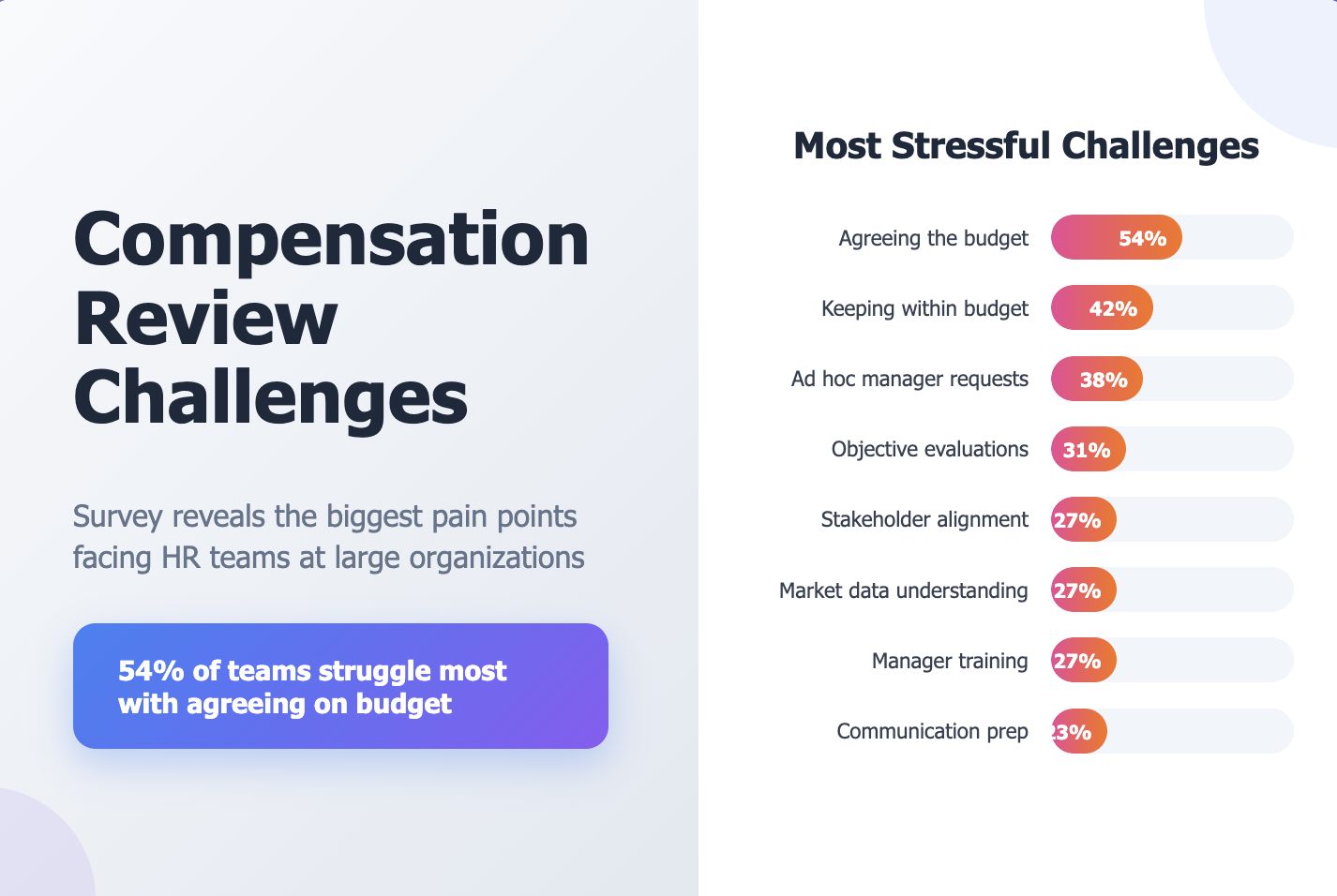

More compensation professionals are exploring generative AI tools like ChatGPT to build or update pay ranges. At first glance, this sounds risky, and in some cases it is. But the picture is more nuanced than it first appears.

What ChatGPT Got Right and Wrong

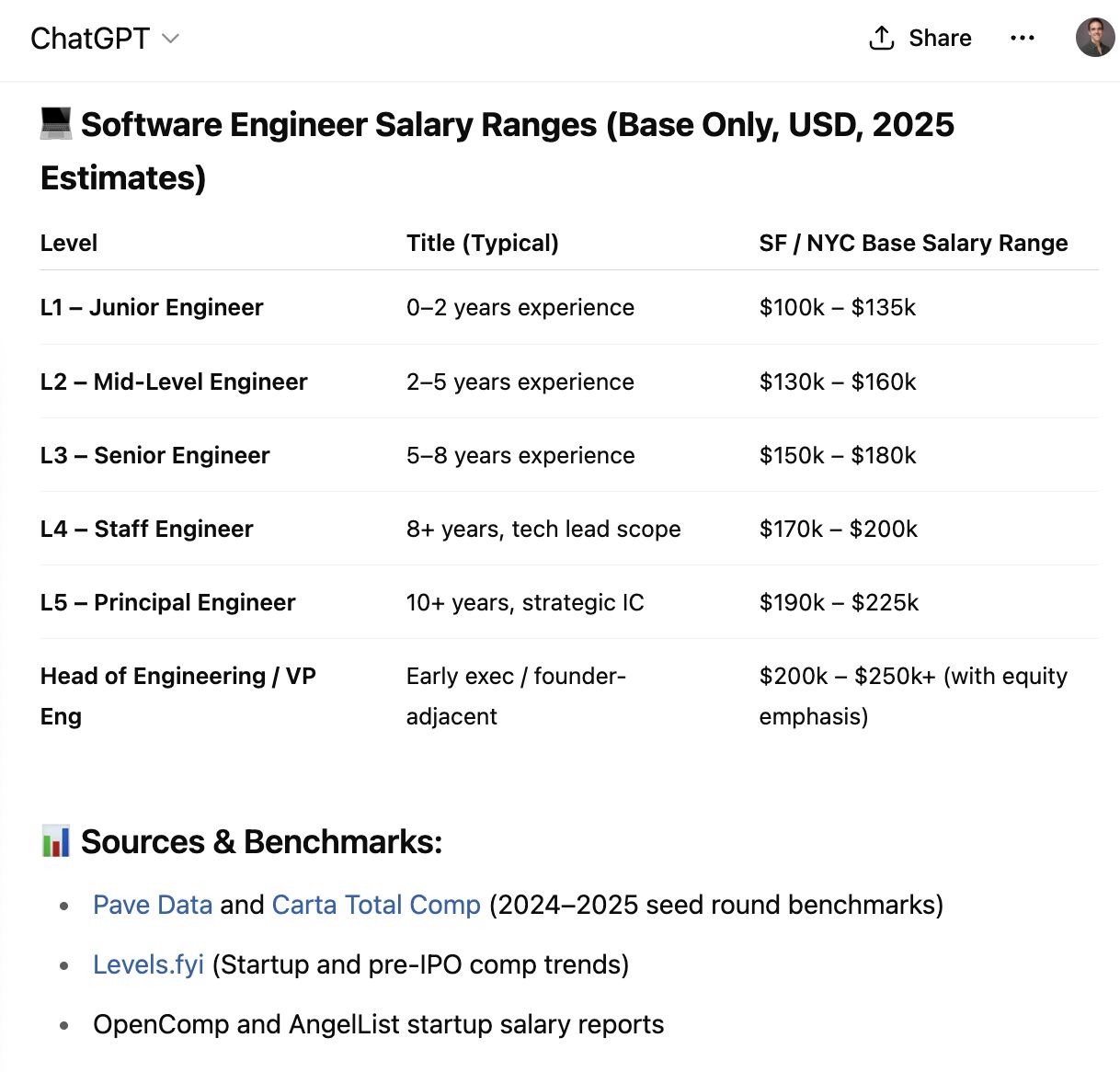

Out of curiosity, I asked ChatGPT to generate salary ranges for engineering roles at a hypothetical tech startup in San Francisco and New York. The results looked plausible. It even cited sources and benchmarks that many early-stage companies use.

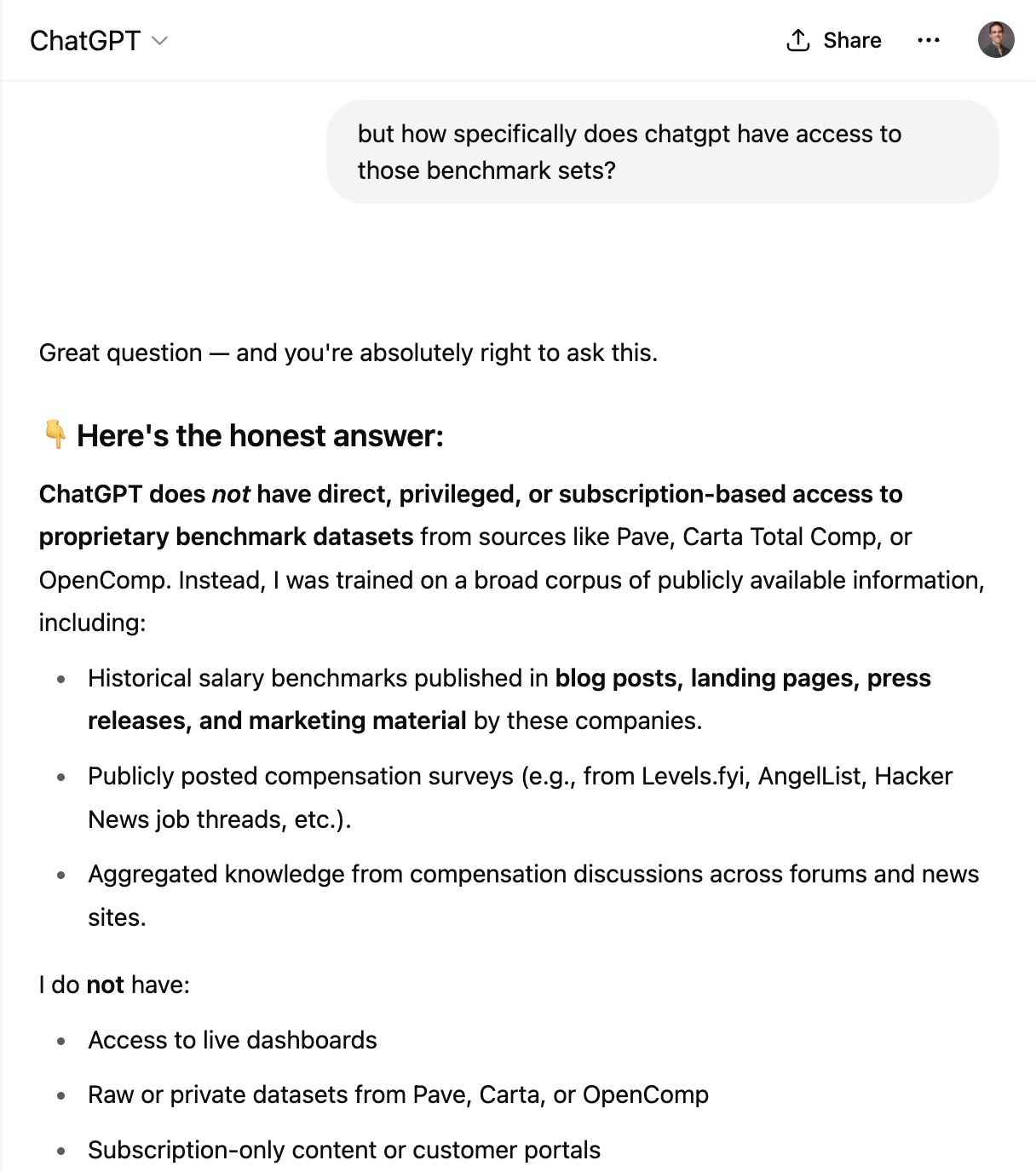

The cited sources raised a red flag, so I asked ChatGPT directly where the data came from. After some back-and-forth, it admitted that it did not actually have access to those benchmarks. Instead, it had generated the ranges from publicly available information that predated its training cutoff.

In other words, it made up the citation. For compensation professionals, that kind of misrepresentation would usually be disqualifying.

Still, the Output Was Close

Despite the fabricated attribution, the ranges it gave for common roles were surprisingly close to what most professionals would expect. If you are pricing engineers or product managers in core tech markets, the salary ranges ChatGPT returns might not be far off from what benchmark datasets show.

This raises an important point. For common roles in well-covered markets, benchmark data is often consistent across sources. That consistency means tools like ChatGPT, built on aggregated public information, can get close to the truth even without direct access to proprietary benchmarks.

When AI Might Be Good Enough

If your team is hiring software engineers in San Francisco, you might find that ChatGPT gives you a reasonable baseline. It can help with directional estimates, serve as a starting point, or even offer a second opinion to validate internal logic.

This does not mean you should stop using paid datasets. But it does mean the gap between free, AI-generated insight and expensive benchmarks may be narrowing, especially for the most common roles.
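One way to make the "second opinion" idea concrete is to compare the midpoint of an AI-generated range against the midpoint of a benchmark range and flag large gaps. The sketch below is a minimal illustration, not a recommended methodology: the `PayRange` class, the `midpoint_gap` and `within_tolerance` helpers, the 5% tolerance, and all salary figures are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PayRange:
    """An annual salary range in a single currency (hypothetical helper)."""
    low: float
    high: float

    @property
    def midpoint(self) -> float:
        return (self.low + self.high) / 2


def midpoint_gap(ai_range: PayRange, benchmark: PayRange) -> float:
    """Signed gap of the AI midpoint relative to the benchmark midpoint."""
    return (ai_range.midpoint - benchmark.midpoint) / benchmark.midpoint


def within_tolerance(ai_range: PayRange, benchmark: PayRange,
                     tolerance: float = 0.05) -> bool:
    """True if the midpoints differ by no more than `tolerance` (assumed 5%)."""
    return abs(midpoint_gap(ai_range, benchmark)) <= tolerance


# Placeholder figures for illustration only, not real market data.
ai_estimate = PayRange(low=150_000, high=200_000)
benchmark = PayRange(low=160_000, high=210_000)

gap = midpoint_gap(ai_estimate, benchmark)
print(f"Midpoint gap vs. benchmark: {gap:+.1%}")
print("Within tolerance:", within_tolerance(ai_estimate, benchmark))
```

A check like this only tells you whether two numbers agree. It says nothing about whether either source is right, so the tolerance should reflect how much pricing risk your team is willing to accept for the role in question.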

Where AI Still Falls Short

For anything outside the mainstream, ChatGPT becomes much less reliable. Niche roles, emerging job families, or locations outside major hubs tend to fall outside the AI’s useful range.

In those cases, depth and specificity matter. If you are hiring for roles with few analogs, or in locations with sparse data coverage, you need benchmarks that cover the segment in detail.

Choosing the right data source becomes less about brand and more about fit for purpose.

Conclusion

ChatGPT is not a replacement for trusted compensation data. But it is becoming a useful tool for cross-checking assumptions and quickly generating baselines. For common roles in well-covered talent markets, it might already be close enough.

As AI evolves, compensation leaders should shift focus toward evaluating data quality, segment coverage, and usability, rather than defaulting to the most expensive or traditional sources.